Project

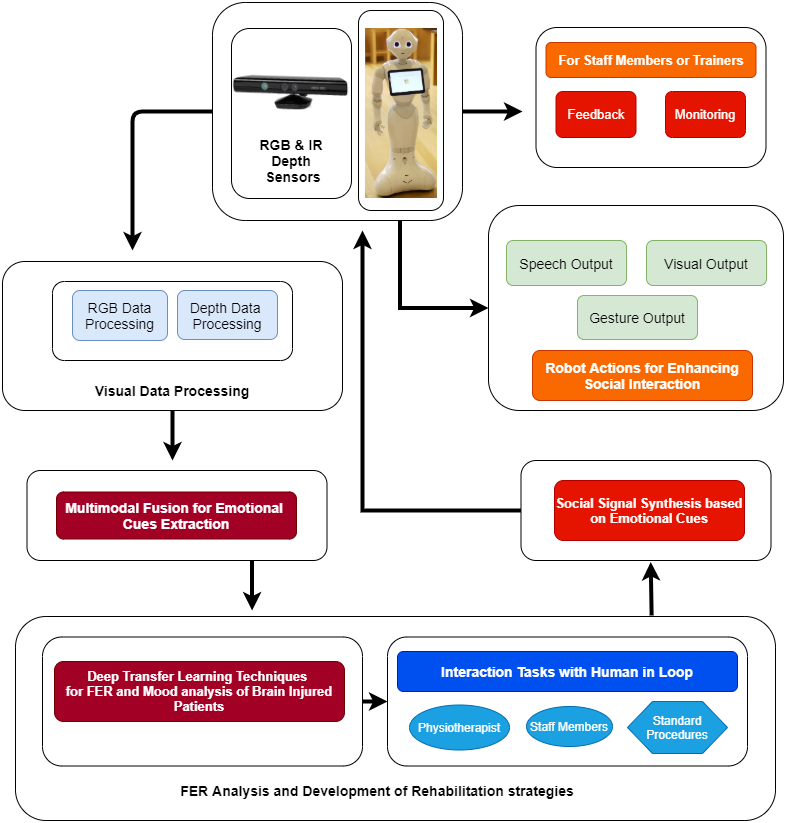

Affective states play a crucial role in stroke rehabilitation, but patients are often unable to express their emotions because of motor or cognitive impairments. Earlier work has shown that affective expressions can be recognized using computer vision. Unfortunately, these methods do not work for people who have suffered brain damage. We therefore started a project with a regional neurocenter to develop a suitable affect recognition model that can be used to monitor rehabilitation sessions and to engage patients in motivational interactions with a social robot, SoftBank's Pepper.

A facial emotion recognition system involves three basic steps. The first is face acquisition, which covers face localization and head pose estimation. It is followed by face alignment using geometric landmark points such as the apex of the nose or the curve of the eyes. These facial landmarks are tracked, and the faces are then cropped to match the network's input parameters. Before the crops are fed to the network, a face quality assessment is applied so that only high-quality facial data is processed and no computation is wasted on unusable frames. The second step is the extraction and representation of facial data. In the third step, facial expressions are classified, both per frame and per sequence.
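The quality gate and the two levels of classification can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the `FaceCrop` fields, the thresholds, and the hard-coded per-frame labels are hypothetical, and the majority vote is only a simple stand-in for a learned sequence model, not the method used in the project.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class FaceCrop:
    """One aligned, cropped face (all fields are hypothetical)."""
    frame_index: int
    sharpness: float    # assumed quality score in [0, 1]
    yaw_degrees: float  # estimated head rotation away from frontal
    frame_label: str    # output of an assumed per-frame classifier


def passes_quality(crop, min_sharpness=0.5, max_abs_yaw=30.0):
    """Quality gate: reject blurry crops and strongly rotated heads."""
    return crop.sharpness >= min_sharpness and abs(crop.yaw_degrees) <= max_abs_yaw


def sequence_label(crops):
    """Sequence-level decision: majority vote over crops that pass the gate."""
    kept = [c for c in crops if passes_quality(c)]
    if not kept:
        return None  # no usable frame in this sequence
    return Counter(c.frame_label for c in kept).most_common(1)[0][0]


crops = [
    FaceCrop(0, 0.9, 5.0, "happy"),
    FaceCrop(1, 0.2, 3.0, "sad"),     # too blurry, rejected
    FaceCrop(2, 0.8, 45.0, "sad"),    # head turned too far, rejected
    FaceCrop(3, 0.7, -10.0, "happy"),
    FaceCrop(4, 0.6, 12.0, "neutral"),
]
print(sequence_label(crops))  # prints "happy"
```

Filtering before classification means that rejected frames never reach the network, which is where the saving in computation comes from.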

In our model we combine Convolutional Neural Networks (CNNs) with Long Short-Term Memory (LSTM) networks. CNNs are a specialized class of artificial neural networks with learnable weights and biases; here they extract facial features from the input faces of patients with traumatic brain injury (TBI). The LSTM then exploits the temporal relations among the extracted features.
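How a CNN and an LSTM fit together can be sketched in a few lines of NumPy. This is not the project's network: the layer sizes, the single convolution with global average pooling, the random untrained weights, and the three output classes are all assumptions chosen to keep the example small. It shows only the data flow, with per-frame convolutional features fed step by step into an LSTM whose final hidden state is classified.

```python
import numpy as np


def conv2d_valid(image, kernel):
    """Single-channel 'valid' 2-D cross-correlation, as in a CNN layer."""
    kh, kw = kernel.shape
    oh, ow = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.empty((oh, ow))
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def cnn_features(frame, kernels):
    """Per-frame features: conv -> ReLU -> global average pool, one value per kernel."""
    return np.array([np.maximum(conv2d_valid(frame, k), 0.0).mean() for k in kernels])


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def lstm_step(x, h, c, W, U, b):
    """One LSTM step; gate rows are stacked as [input, forget, candidate, output]."""
    n = h.shape[0]
    z = W @ x + U @ h + b
    i, f, o = sigmoid(z[:n]), sigmoid(z[n:2 * n]), sigmoid(z[3 * n:])
    g = np.tanh(z[2 * n:3 * n])
    c = f * c + i * g          # update the cell state
    h = o * np.tanh(c)         # expose the gated hidden state
    return h, c


def classify_sequence(frames, kernels, W, U, b, W_out):
    """Run CNN features of each frame through the LSTM, then apply softmax."""
    n = U.shape[1]
    h, c = np.zeros(n), np.zeros(n)
    for frame in frames:
        h, c = lstm_step(cnn_features(frame, kernels), h, c, W, U, b)
    logits = W_out @ h
    probs = np.exp(logits - logits.max())
    return probs / probs.sum()


# Hypothetical sizes and random (untrained) weights, for illustration only.
rng = np.random.default_rng(0)
n_kernels, hidden, n_classes = 4, 8, 3
kernels = rng.normal(size=(n_kernels, 3, 3))
W = rng.normal(scale=0.1, size=(4 * hidden, n_kernels))
U = rng.normal(scale=0.1, size=(4 * hidden, hidden))
b = np.zeros(4 * hidden)
W_out = rng.normal(scale=0.1, size=(n_classes, hidden))

frames = rng.random(size=(5, 16, 16))  # five 16x16 grayscale face crops
probs = classify_sequence(frames, kernels, W, U, b, W_out)
print(probs.shape, probs.sum())
```

Because the weights are random, the resulting probabilities carry no meaning; in practice both parts would be trained on labeled sequences of face crops.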

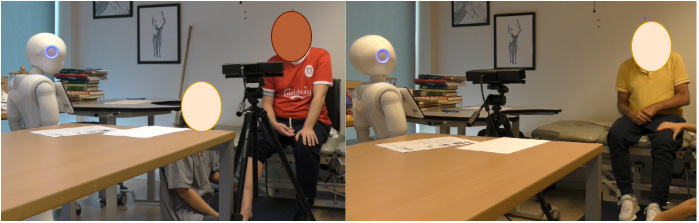

For the human-robot interaction, a Pepper robot equipped with the trained deep emotion recognition model is introduced at the neurocenter. The study emphasizes the therapeutic value for stroke rehabilitation when it is supported by tools that provide assessment and feedback in neurocenters.

Funding

This PhD is internally funded by Aalborg University.

Contact

PhD-Fellow: Chaudhary Muhammad Aqdus Ilyas

Mail: cmai@create.aau.dk

Supervisor: Kamal Nasrollahi

Mail: kn@create.aau.dk

Supervisor: Matthias Rehm

Mail: matthias@create.aau.dk