Agglomerative Token Clustering

Joakim Bruslund Haurum, Sergio Escalera, Graham W. Taylor, and Thomas B. Moeslund

ECCV 2024


Vision Transformers (ViTs) have been used with great success for a wide variety of computer vision tasks, such as image classification, synthesis, and segmentation. ViTs can process variable-length input sequences, and even allow the sequences to be modified throughout the network. This gives ViTs stronger expressive power than CNNs, but comes at a computational cost due to the quadratic scaling of the self-attention computation. Token reduction has been shown to be a promising subfield that directly decreases model complexity by reducing the input sequence through pruning or merging.
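To make the quadratic scaling concrete, the following minimal sketch counts the pairwise attention scores a single head computes before and after token reduction. The token count of 197 (a 224x224 image split into 16x16 patches, plus one class token) and the keep rate of 0.5 are illustrative assumptions, and `attention_score_count` is a hypothetical helper, not part of the ATC code base.

```python
def attention_score_count(num_tokens: int) -> int:
    """Number of query-key scores one attention head computes: N * N."""
    return num_tokens * num_tokens


# Assumed setup: 14 x 14 = 196 patches plus one class token.
full_tokens = 14 * 14 + 1
keep_rate = 0.5  # hypothetical keep rate, chosen only for illustration
reduced_tokens = max(1, int(full_tokens * keep_rate))

full_cost = attention_score_count(full_tokens)
reduced_cost = attention_score_count(reduced_tokens)

print(f"{full_tokens} tokens -> {full_cost} scores")
print(f"{reduced_tokens} tokens -> {reduced_cost} scores")
print(f"relative cost: {reduced_cost / full_cost:.3f}")
```

Halving the sequence length cuts the number of attention scores to roughly a quarter, which is why reducing tokens is an effective lever on model complexity.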

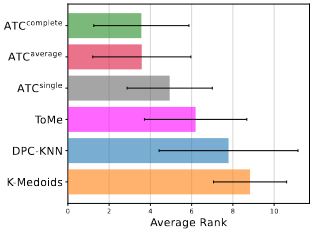

We present a novel merging-based token reduction method, Agglomerative Token Clustering (ATC), which outperforms all prior merging-based and pruning-based token reduction methods on both classification tasks and dense computer vision tasks such as image synthesis and object detection and segmentation. ATC also achieves comparable performance when applied off-the-shelf, i.e. without any fine-tuning.

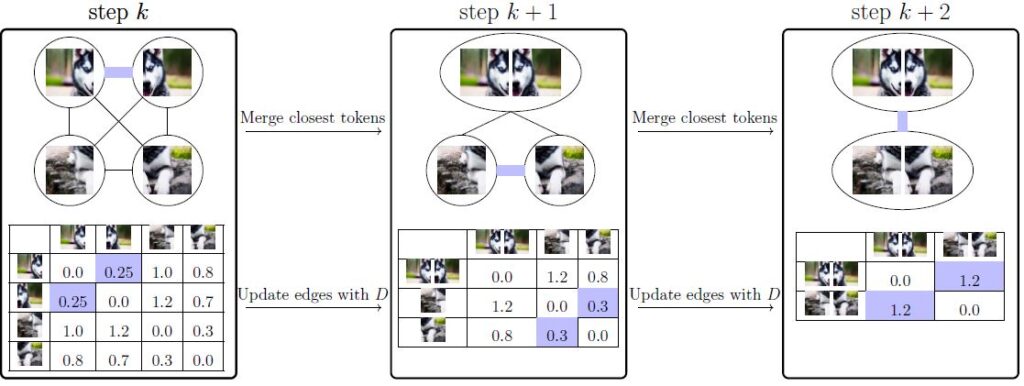

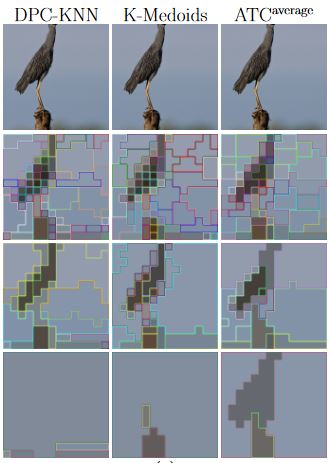

ATC is motivated by the observation that prior merging-based methods such as K-Medoids, DPC-KNN, and ToMe all perform merging globally, which may lead to redundant clusters. Our hypothesis is that hierarchically merging similar, and thus redundant, observations results in more informative groupings. A natural and robust way to bring this notion into token reduction is agglomerative clustering [15], where tokens are iteratively clustered in a bottom-up, hierarchical manner.
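The bottom-up procedure described above can be sketched in a few lines of plain Python. This is an illustrative sketch, not the authors' implementation: the cosine distance, the average linkage, the mean-based merging of each cluster, and the names `cosine_distance` and `agglomerative_token_merge` are all assumptions made for this example, and the naive search over cluster pairs is far slower than a practical implementation would be.

```python
import math


def cosine_distance(a, b):
    """1 - cosine similarity between two token vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def agglomerative_token_merge(tokens, num_clusters):
    """Merge tokens bottom-up until only num_clusters clusters remain.

    Returns (merged_tokens, clusters), where clusters holds token indices.
    """
    n = len(tokens)
    # Pairwise distances between the original tokens.
    dist = [[cosine_distance(tokens[i], tokens[j]) for j in range(n)]
            for i in range(n)]
    # Every token starts in its own cluster.
    clusters = [[i] for i in range(n)]

    def average_linkage(c1, c2):
        # Assumed linkage: mean distance over all cross-cluster pairs.
        return sum(dist[i][j] for i in c1 for j in c2) / (len(c1) * len(c2))

    while len(clusters) > num_clusters:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                d = average_linkage(clusters[a], clusters[b])
                if best is None or d < best[0]:
                    best = (d, a, b)
        _, a, b = best
        # Fuse the two closest clusters into one.
        clusters[a] = clusters[a] + clusters[b]
        del clusters[b]

    # Represent each cluster by the mean of its member tokens.
    dim = len(tokens[0])
    merged = [[sum(tokens[i][k] for i in c) / len(c) for k in range(dim)]
              for c in clusters]
    return merged, clusters


# Toy example: two pairs of near-duplicate tokens and one outlier.
tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.2, 0.8], [-1.0, 0.0]]
merged, clusters = agglomerative_token_merge(tokens, num_clusters=3)
print(clusters)
print(merged)
```

In the toy example, the two near-duplicate pairs are fused first and the dissimilar token is left in its own cluster, so the five input tokens are reduced to three.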

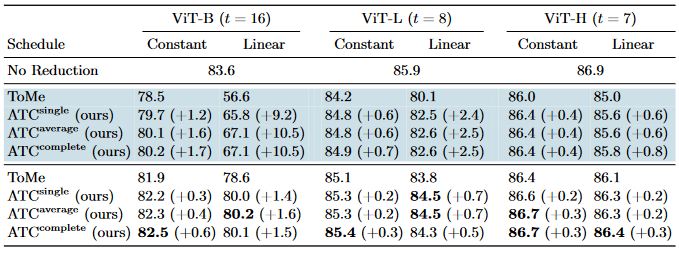

We find that ATC consistently outperforms prior hard-merging methods, as well as pruning-based methods, across several classification tasks and backbone pretraining methods.

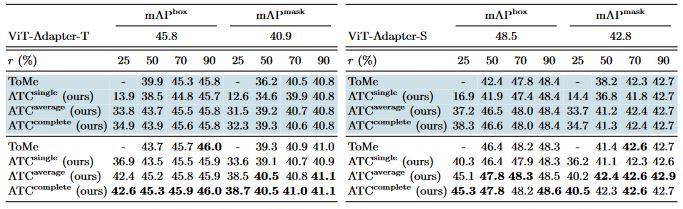

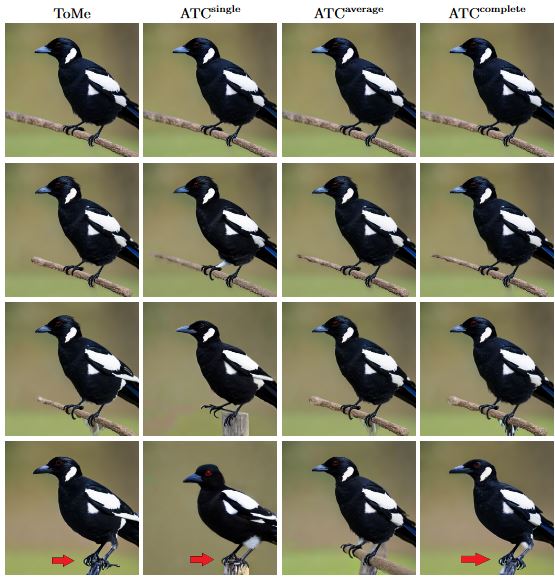

We also find that ATC outperforms all prior token reduction methods for Object Detection and Segmentation on the COCO 2017 dataset, and that it improves the quality of Stable Diffusion generations compared to ToMe when reducing the number of tokens.

Citation

@InProceedings{Haurum_2024_ECCV,
  author    = {Joakim Bruslund Haurum and Sergio Escalera and Graham W. Taylor and Thomas B. Moeslund},
  title     = {Agglomerative Token Clustering},
  booktitle = {Computer Vision -- ECCV 2024},
  month     = {October},
  year      = {2024},
}