The following papers were accepted for presentation at the Joint Workshop on Marine Vision 2025 and will be included in the official ICCV 2025 Workshop Proceedings. The papers are listed in random order.

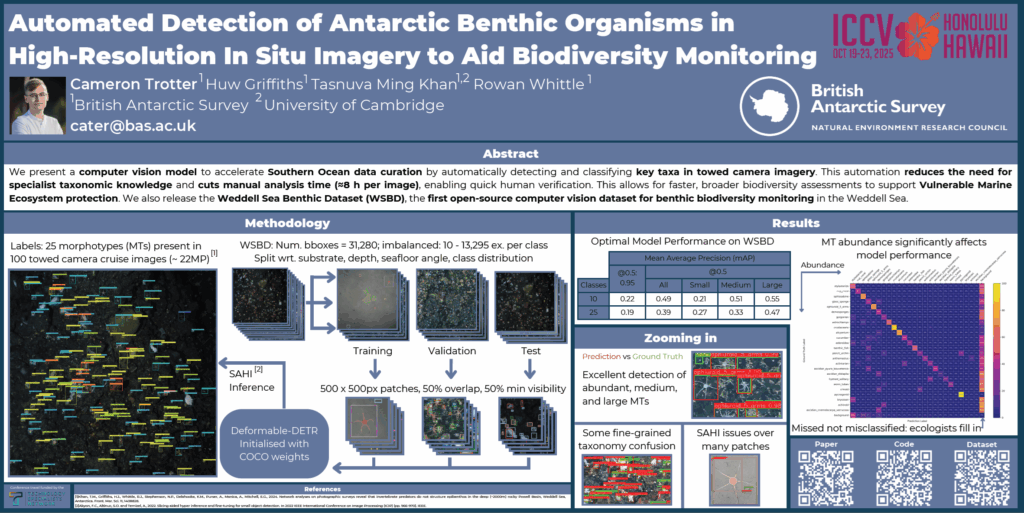

Automated Detection of Antarctic Benthic Organisms in High-Resolution In Situ Imagery to Aid Biodiversity Monitoring

Cameron Trotter, Huw Griffiths, Tasnuva Ming Khan, Rowan Whittle

Abstract

Monitoring benthic biodiversity in Antarctica is vital for understanding ecological change in response to climate-driven pressures. This work is typically performed using high-resolution imagery captured in situ, though manual annotation of such data remains laborious and specialised, impeding large-scale analysis. We present a tailored object detection framework for identifying and classifying Antarctic benthic organisms in high-resolution towed camera imagery, alongside the first public computer vision dataset for benthic biodiversity monitoring in the Weddell Sea. Our approach addresses key challenges associated with marine ecological imagery, including limited annotated data, variable object sizes, and complex seafloor structure. The proposed framework combines resolution-preserving patching, spatial data augmentation, fine-tuning, and postprocessing via SAHI. We benchmark multiple object detection architectures and demonstrate strong performance in detecting medium and large organisms across 25 fine-grained morphotypes, significantly more than other works in this area. Detection of small and rare taxa remains a challenge, reflecting limitations in current detection architectures. Our framework provides a scalable foundation for future machine-assisted in situ benthic biodiversity monitoring research.
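
As a rough illustration of the patching idea mentioned in this abstract, the sketch below splits a large image into overlapping full-resolution windows so that a detector never sees a downscaled frame. It is not the authors' code and not the SAHI library API; the function name `tile_boxes`, the 1024-pixel tile size, and the 20% overlap are assumptions chosen for illustration.

```python
def tile_boxes(width, height, tile=1024, overlap=0.2):
    """Return (x0, y0, x1, y1) windows that cover a width x height image.

    Hypothetical helper: tile size and overlap ratio are illustrative defaults.
    """
    step = max(1, int(tile * (1 - overlap)))

    def starts(size):
        # A single window is enough when the image is smaller than one tile.
        if size <= tile:
            return [0]
        offsets = list(range(0, size - tile, step))
        offsets.append(size - tile)  # last window sits flush with the border
        return offsets

    return [
        (x, y, min(x + tile, width), min(y + tile, height))
        for y in starts(height)
        for x in starts(width)
    ]


if __name__ == "__main__":
    for box in tile_boxes(2048, 1024, tile=1024, overlap=0.5):
        print(box)
```

Detections from each window would then be shifted back by the window offset and merged, for example with non-maximum suppression, which is the role the abstract assigns to SAHI postprocessing.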

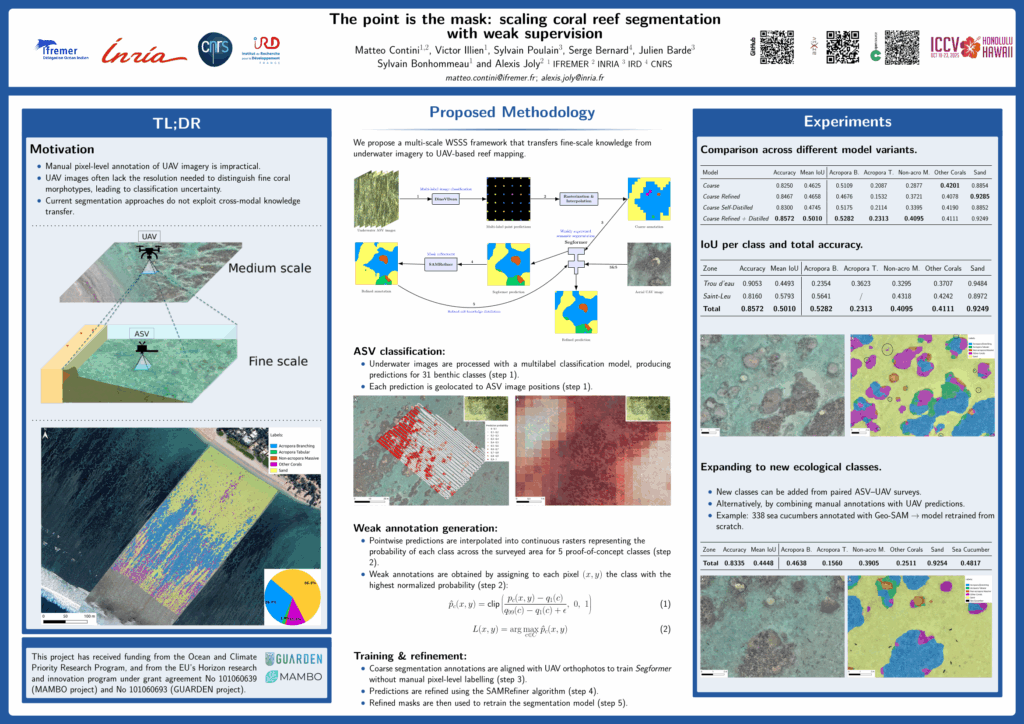

The Point is the Mask: Scaling Coral Reef Segmentation with Weak Supervision

Matteo Contini, Victor Illien, Sylvain Poulain, Serge Bernard, Julien Barde, Sylvain Bonhommeau, Alexis Joly

Abstract

Obtaining pixel-level annotations over large spatial extents remains a major bottleneck for deploying machine learning in marine remote sensing. Here we present a multi-scale weakly supervised semantic segmentation framework that enables training high-resolution segmentation models from dense, classification-based outputs. Our method uses fine-scale predictions from underwater images to identify multiple benthic classes. These predictions are spatially interpolated and converted into coarse masks, which serve as supervision for training a segmentation model on drone-based aerial imagery. A self-distillation step, applied after mask refinement, further improves spatial accuracy without requiring additional annotations. We demonstrate the approach on coral reef imagery, enabling large-area segmentation of coral morphotypes and illustrating its flexibility in integrating new classes. This study presents a scalable, cost-effective methodology for high-resolution reef monitoring, combining low cost data collection, weakly supervised deep learning and multi-scale remote sensing. By enabling accurate segmentation from aerial data with minimal annotation effort, the method contributes to improved coral reef assessment and supports large-scale ecological monitoring and conservation planning.

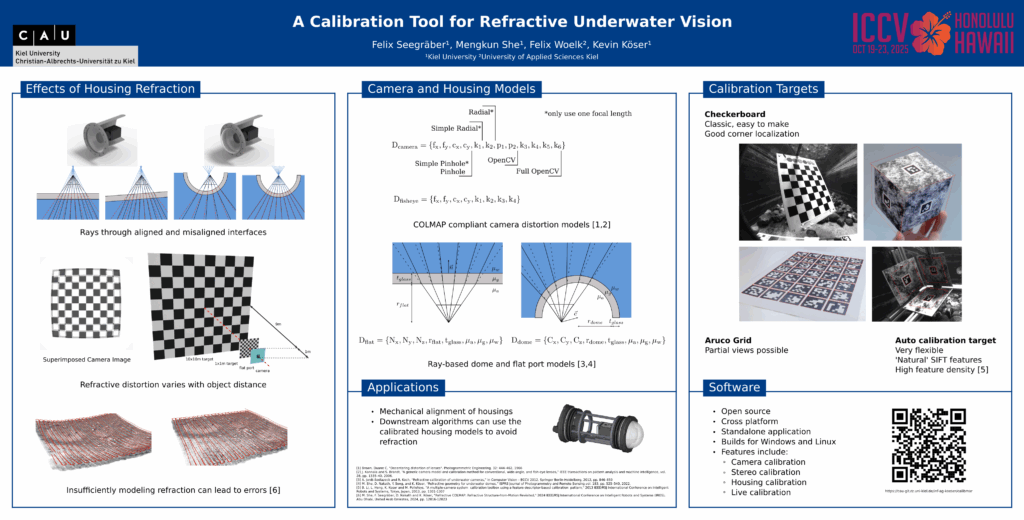

A Calibration Tool for Refractive Underwater Vision

Felix Seegräber, Mengkun She, Felix Woelk, Kevin Köser

Abstract

Many underwater applications rely on vision sensors and require proper camera calibration, i.e. knowing the incoming light ray for each pixel in the image. While for the ideal pinhole camera model all viewing rays intersect in a single 3D point, underwater cameras suffer from – possibly multiple – refractions of light rays at the interfaces of water, glass and air. These changes of direction depend on the position and orientation of the camera inside the water-proof housing, as well as on the shape and properties of the optical window, the port, itself. In recent years explicit models for underwater vision behind common ports such as flat or dome port have been proposed, but the underwater community is still lacking a calibration tool which can determine port parameters through refractive calibration. With this work we provide the first open source implementation of an underwater refractive camera calibration toolbox. It allows end-to-end calibration of underwater vision systems, including camera, stereo and housing calibration for systems with dome or flat ports. The implementation is verified using rendered datasets and real-world experiments.
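
To make the refraction effect described in this abstract concrete, the following sketch applies the vector form of Snell's law to a single viewing ray crossing a flat interface. It is a textbook illustration, not part of the toolbox the paper presents; the function name `refract` and the refractive indices (1.0 for air, 1.33 for water) are assumptions.

```python
import math


def refract(direction, normal, n1, n2):
    """Refract a unit ray at an interface using the vector form of Snell's law.

    direction: unit vector of the incoming ray.
    normal: unit surface normal pointing towards the side the ray comes from.
    n1, n2: refractive indices before and after the interface.
    Returns the refracted unit vector, or None on total internal reflection.
    """
    ratio = n1 / n2
    cos_i = -sum(d * n for d, n in zip(direction, normal))
    sin2_t = ratio * ratio * (1.0 - cos_i * cos_i)
    if sin2_t > 1.0:
        return None  # total internal reflection, no transmitted ray
    cos_t = math.sqrt(1.0 - sin2_t)
    return tuple(
        ratio * d + (ratio * cos_i - cos_t) * n
        for d, n in zip(direction, normal)
    )


if __name__ == "__main__":
    theta = math.radians(30.0)
    ray = (math.sin(theta), 0.0, math.cos(theta))
    # Assumed indices: air inside the housing (1.0), water outside (1.33).
    print(refract(ray, (0.0, 0.0, -1.0), 1.0, 1.33))
```

A real flat port involves two such interfaces (air to glass, glass to water), and a dome port has a curved surface, so the normal changes from ray to ray; this is why the rays no longer meet in a single point as they do in the pinhole model.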

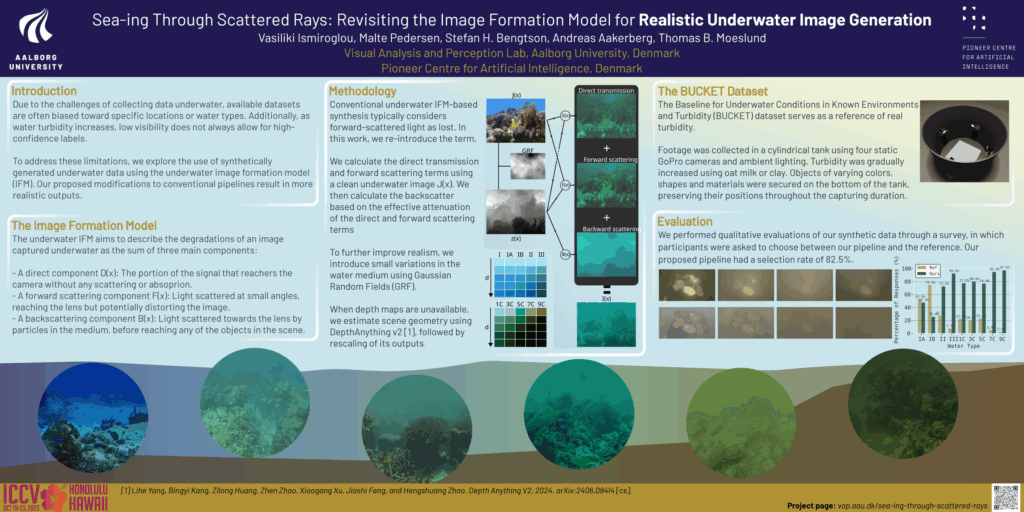

Sea-ing Through Scattered Rays: Revisiting the Image Formation Model for Realistic Underwater Image Generation

Vasiliki Ismiroglou, Malte Pedersen, Stefan Hein Bengtson, Andreas Aakerberg, Thomas B. Moeslund

Abstract

In recent years, the underwater image formation model has found extensive use in synthetic underwater data generation. While many approaches focus on scenes primarily affected by discoloration, they often overlook the model’s ability to capture the complex, distance-dependent visibility loss present in highly turbid environments. In this work, we propose an improved synthetic data generation pipeline that includes the commonly omitted forward scattering term, while also considering a non-uniform medium. Additionally, we collected the BUCKET dataset under controlled turbidity conditions to acquire real turbid footage with corresponding reference images. Our results demonstrate qualitative improvements over the reference model, particularly under increasing turbidity, with an 82.5% selection rate by survey participants. Data and code can be accessed through the project page: https://vap.aau.dk/sea-ing-through-scattered-rays/
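
For context, the simplified image formation model that is commonly used for synthetic underwater data combines an attenuated direct signal with a backscatter term. The sketch below implements only that simplified per-pixel form; it deliberately omits the forward scattering term and the non-uniform medium that the paper adds. The function name `degrade_pixel` and the use of a single attenuation coefficient for both terms are assumptions.

```python
import math


def degrade_pixel(clear_value, distance_m, beta, backscatter_inf):
    """Simplified underwater image formation for one pixel and one channel.

    clear_value: intensity of the scene without water (0..1).
    distance_m: camera-to-scene distance in metres.
    beta: attenuation coefficient per metre (assumed shared by both terms).
    backscatter_inf: veiling light intensity at infinite distance (0..1).
    """
    transmission = math.exp(-beta * distance_m)
    direct = clear_value * transmission
    backscatter = backscatter_inf * (1.0 - transmission)
    return direct + backscatter


if __name__ == "__main__":
    for distance in (0.0, 1.0, 5.0, 20.0):
        print(distance, round(degrade_pixel(0.8, distance, 0.5, 0.2), 4))
```

As the distance grows, the pixel converges to the veiling light, which is the distance-dependent visibility loss the abstract refers to.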

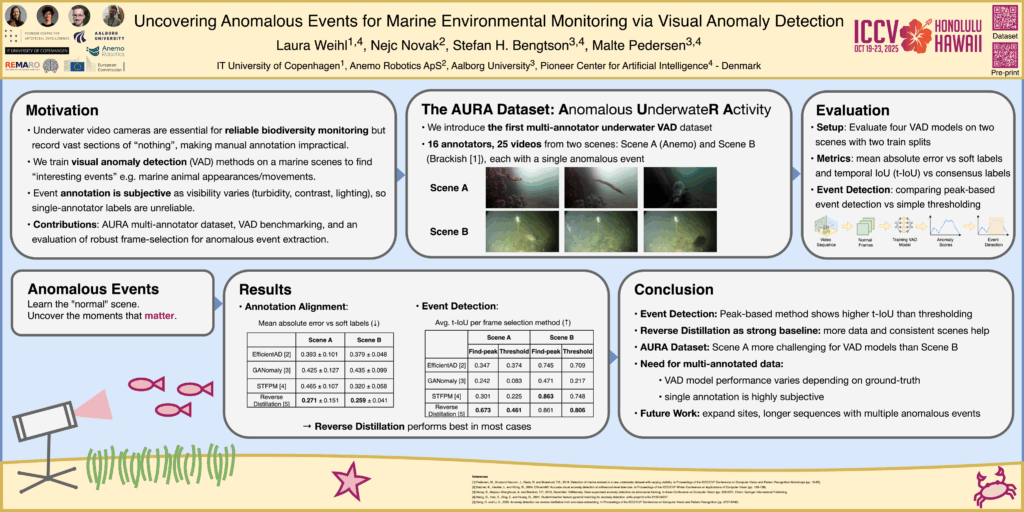

Uncovering Anomalous Events for Marine Environmental Monitoring via Visual Anomaly Detection

Laura Weihl, Stefan Hein Bengtson, Nejc Novak, Malte Pedersen

Abstract

Underwater video monitoring is a promising strategy for assessing marine biodiversity, but the vast volume of uneventful footage makes manual inspection highly impractical. In this work, we explore the use of visual anomaly detection (VAD) based on deep neural networks to automatically identify interesting or anomalous events. We introduce AURA, the first multi-annotator benchmark dataset for underwater VAD and evaluate four state-of-the-art VAD models across two marine scenes. We demonstrate the importance of robust frame selection strategies to extract meaningful video segments. Our comparison against multiple annotators reveals that VAD performance of current models varies dramatically and is highly sensitive to both the amount of training data and the variability in visual content that defines “normal” scenes. Our results highlight the value of soft and consensus labels and offer a practical approach for supporting scientific exploration and scalable biodiversity monitoring.
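
The abstract does not specify how soft and consensus labels are derived from multiple annotators. One common convention, shown here purely as an assumption, is to average the binary votes per frame for a soft label and to take a strict majority for a consensus label. The function name `soft_and_consensus` is hypothetical.

```python
def soft_and_consensus(votes):
    """Combine per-annotator binary frame labels.

    votes: list with one entry per annotator; each entry is a list of 0/1
    labels, one per frame (1 = anomalous). All lists have the same length.
    Returns (soft, consensus): the fraction of annotators flagging each frame,
    and a 0/1 label that is 1 only under a strict majority.
    """
    annotator_count = len(votes)
    soft = [sum(frame_votes) / annotator_count for frame_votes in zip(*votes)]
    consensus = [1 if share > 0.5 else 0 for share in soft]
    return soft, consensus


if __name__ == "__main__":
    example_votes = [
        [1, 0, 1],  # annotator A
        [1, 0, 0],  # annotator B
        [0, 0, 1],  # annotator C
    ]
    print(soft_and_consensus(example_votes))
```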

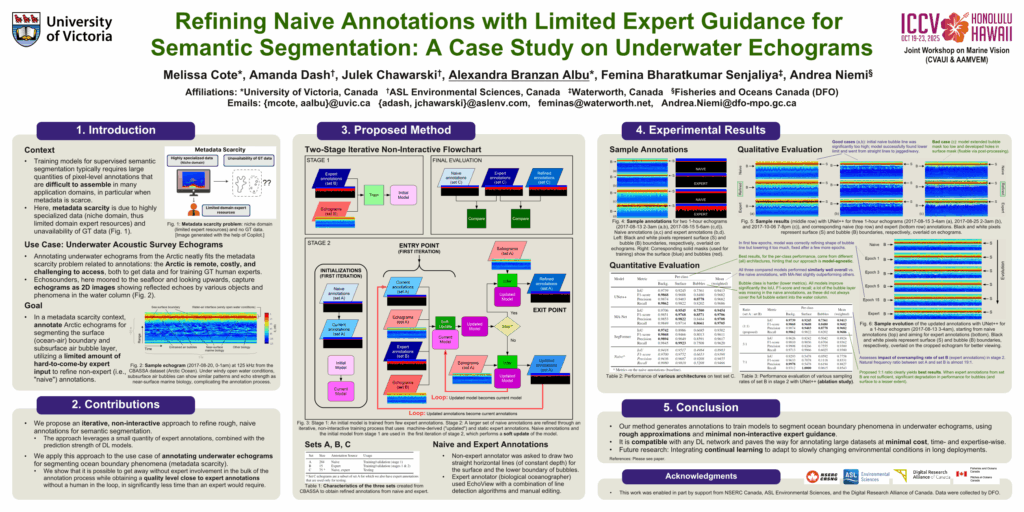

Refining Naive Annotations with Limited Expert Guidance for Semantic Segmentation: A Case Study on Underwater Echograms

Melissa Cote, Amanda Dash, Julek Chawarski, Alexandra Branzan Albu, Femina Bharatkumar Senjaliya, Andrea Niemi

Abstract

Training models for supervised semantic segmentation typically requires large quantities of pixel-level annotations that are difficult to assemble in many application domains, in particular when meta-data is scarce. In the context of underwater echogram analysis for environmental monitoring, meta-data scarcity is manifested by a lack of ground truth and limited domain expert resources, hindering standard data annotation processes. We propose an iterative, non-interactive annotation approach that allows us to obtain large quantities of echogram annotations using minimal expert guidance. In a two-stage process, a segmentation neural network is first purposely overfitted to a very small expertly annotated set, and is then used to iteratively refine a larger set of rough, naive annotations. Experiments on the Cape Bathurst Arctic Sea Surface Acoustics (CBASSA) dataset showcase our method’s capability to generate annotations for the sea surface and subsurface entrained air bubbles that approach expert quality level (within 5.5 p.p. for the intersection over union and 3 p.p. for the F1-score), starting from simple non-expert lines obtained at a fraction of the time required by experts. They also show that our method is compatible with both convolutional- and transformer-based neural networks, and pave the way for annotating large datasets resulting from long/continuous deployments for underwater environmental monitoring, at minimal cost.
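
The two metrics quoted in this abstract, intersection over union (IoU) and F1-score, can be computed from binary masks as sketched below; "p.p." denotes percentage points, i.e. the absolute difference between two percentages. The function name `iou_and_f1` and the convention of returning 1.0 when both masks are empty are assumptions, and the paper's exact evaluation protocol may differ.

```python
def iou_and_f1(predicted, target):
    """Compute IoU and F1 for two flattened binary masks of equal length."""
    pairs = list(zip(predicted, target))
    true_pos = sum(1 for p, t in pairs if p and t)
    false_pos = sum(1 for p, t in pairs if p and not t)
    false_neg = sum(1 for p, t in pairs if t and not p)

    union = true_pos + false_pos + false_neg
    if union == 0:
        return 1.0, 1.0  # assumed convention: two empty masks agree perfectly
    iou = true_pos / union
    f1 = 2 * true_pos / (2 * true_pos + false_pos + false_neg)
    return iou, f1


if __name__ == "__main__":
    print(iou_and_f1([1, 1, 0, 0], [1, 0, 1, 0]))
```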

DebrisVision: Bridging the Synthetic-to-Real Gap for Enhanced Underwater Debris Analysis

Sivaji Retta, Sai Manikanta Eswar Machara, Iyyakutti Iyappan Ganapathi, Divya Velayudhan, Naoufel Werghi

Abstract

Underwater debris poses severe environmental threats; however, its automated detection and segmentation remain challenging due to the lack of large-scale, precisely annotated datasets. To bridge this gap, we introduce DebrisVision, a comprehensive dataset designed to advance AI-driven underwater debris analysis. It includes 9,430 real-world underwater images and 15,570 synthetically generated images created using text-to-image diffusion models. The dataset provides multi-modal annotations (bounding boxes, segmentation masks, depth maps, and textual descriptions), leveraging vision-language foundation models to ensure adaptability. Additionally, an unpaired image translation framework refines synthetic images to better match real-world underwater conditions, improving model generalizability.

Models trained on DebrisVision show significant performance gains. When trained on the combined dataset of synthetic and real images, YOLOv8 achieves a 2.29 times increase in detection mAP50 and a 3.03 times increase in segmentation mAP50; and YOLO11 achieves a 2.01 times increase in detection mAP50 and a 2.62 times increase in segmentation mAP50. DebrisVision also reduces the synthetic-to-real domain gap by 20%, enhancing performance in turbid conditions. By open-sourcing DebrisVision, we equip researchers and engineers with a scalable and diverse dataset to enhance autonomous marine cleanup, robotic navigation, and ecological monitoring. This initiative ultimately contributes to global efforts to combat ocean pollution.

The Coralscapes Dataset: Semantic Scene Understanding in Coral Reefs

Jonathan Sauder, Viktor Domazetoski, Guilhem Banc-Prandi, Gabriela Perna, Anders Meibom, Devis Tuia

Abstract

Coral reefs are declining worldwide due to climate change and local stressors. To inform effective conservation or restoration, monitoring at the highest possible spatial and temporal resolution is necessary. Conventional coral reef surveying methods are limited in scalability due to their reliance on expert labor time, motivating the use of computer vision tools to automate the identification and abundance estimation of live corals from images. However, the design and evaluation of such tools has been impeded by the lack of large high quality datasets. We release the Coralscapes dataset, the first general-purpose dense semantic segmentation dataset for coral reefs, covering 2075 images, 39 benthic classes, and 174k segmentation masks annotated by experts. Coralscapes has a similar scope and the same structure as the widely used Cityscapes dataset for urban scene segmentation, allowing benchmarking of semantic segmentation models in a new challenging domain which requires expert knowledge to annotate. We benchmark a wide range of semantic segmentation models, and find that transfer learning from Coralscapes to existing smaller datasets consistently leads to state-of-the-art performance. Coralscapes will catalyze research on efficient, scalable, and standardized coral reef surveying methods based on computer vision, and holds the potential to streamline the development of underwater ecological robotics.

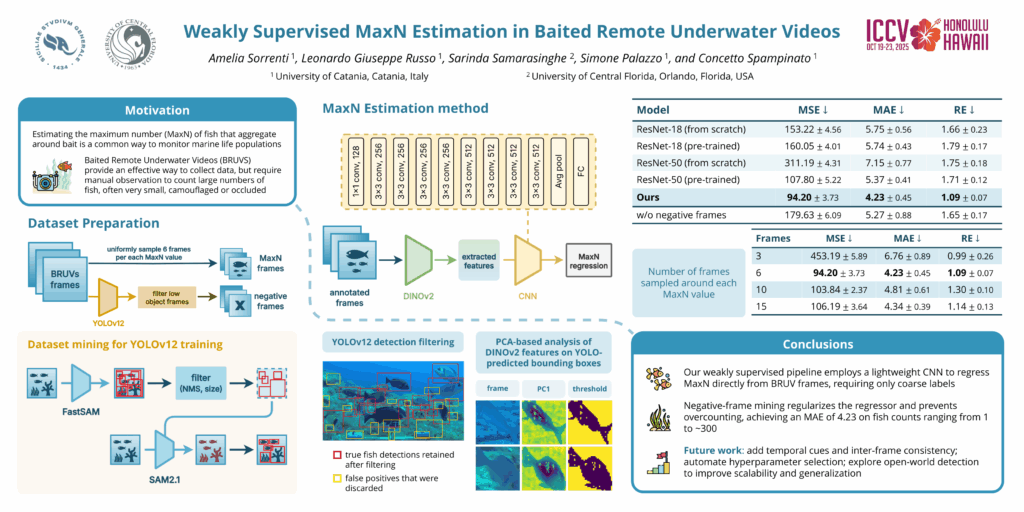

Weakly Supervised maxN Estimation in Baited Remote Underwater Video

Amelia Sorrenti, Leonardo Giuseppe Russo, Sarinda Samarasinghe, Simone Palazzo, Concetto Spampinato

Abstract

Monitoring marine biodiversity is crucial for ecological research and conservation efforts, particularly in regions where traditional survey methods are invasive or labor intensive. Knowing the peak abundance of each species aids marine biologists in assessing ecosystem health. In this paper, we present a framework for estimating the Maximum Number (MaxN) of different fish species from Baited Remote Underwater Video (BRUV) footage from the Tyrrhenian Sea. Our data for this task is weakly labeled, providing only the MaxN count for each species and a single corresponding timestamp per video. Our proposed solution consists of two stages. First, we employ a state-of-the-art object detector to perform negative frame mining, efficiently identifying frames with no fish to supplement our sparse positive labels. Second, we leverage the powerful feature extraction capabilities of the DINOv2 vision transformer, feeding these features into a lightweight convolutional model to estimate the MaxN count. This approach circumvents the need for expensive bounding-box annotations, offering a scalable and cost-effective solution for large-scale marine monitoring. Our method achieves a Mean Absolute Error (MAE) of 4.23 on fish counts ranging from 1 to about 300, corresponding to an average relative error of 1%.
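
To clarify the quantities named in this abstract: MaxN is the largest number of individuals of a species visible in any single frame of a video, and MAE is the mean absolute difference between predicted and true counts. The sketch below computes both from explicit per-frame counts. It illustrates the definitions only; the paper's framework estimates MaxN from weak labels without per-frame counts. The function names and the species keys are hypothetical.

```python
def max_n(frame_counts):
    """Return the per-species MaxN given one {species: count} dict per frame."""
    result = {}
    for counts in frame_counts:
        for species, count in counts.items():
            result[species] = max(result.get(species, 0), count)
    return result


def mean_absolute_error(predicted, actual):
    """Mean absolute difference between two equally long count sequences."""
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


if __name__ == "__main__":
    # Hypothetical species names and counts for three frames of one video.
    frames = [
        {"species_a": 2, "species_b": 1},
        {"species_a": 5},
        {"species_a": 3, "species_b": 4},
    ]
    print(max_n(frames))
    print(mean_absolute_error([3, 10], [5, 10]))
```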

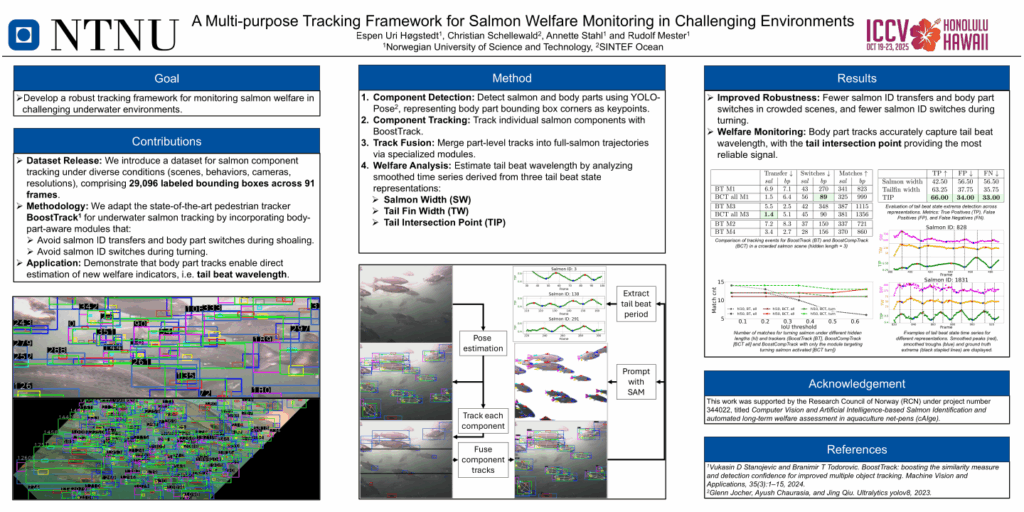

A Multi-purpose Tracking Framework for Salmon Welfare Monitoring in Challenging Environments

Espen Berntzen Høgstedt, Christian Schellewald, Annette Stahl, Rudolf Mester

Abstract

Computer Vision (CV) based continuous, automated and precise salmon welfare monitoring is a key step toward reduced salmon mortality and improved salmon welfare in industrial aquaculture net pens. Available CV methods for determining welfare indicators focus on single indicators, and rely on object detectors and trackers from other application areas to aid their welfare indicator calculation algorithm. This comes with a high resource demand for real-world applications, since each indicator must be calculated separately. In addition, the methods are vulnerable to difficulties in underwater salmon scenes, such as object occlusion, similar object appearance, and similar object motion. To address these challenges, we propose a flexible tracking framework that uses a pose estimation network to extract bounding boxes around salmon and their corresponding body parts, and exploits information about the body parts, through specialized modules, to tackle challenges specific to underwater salmon scenes. Subsequently, the high-detail body part tracks are employed to calculate welfare indicators. To evaluate the performance of our method, we construct two novel datasets assessing two salmon tracking challenges: salmon ID transfers in crowded scenes and salmon ID switches during turning. Our method outperforms the current state-of-the-art pedestrian tracker, BoostTrack, for both salmon tracking challenges. Additionally, we create a dataset for calculating salmon tail beat wavelength, demonstrating that our body part tracking method is particularly well-suited for automated welfare monitoring based on tail beat analysis.

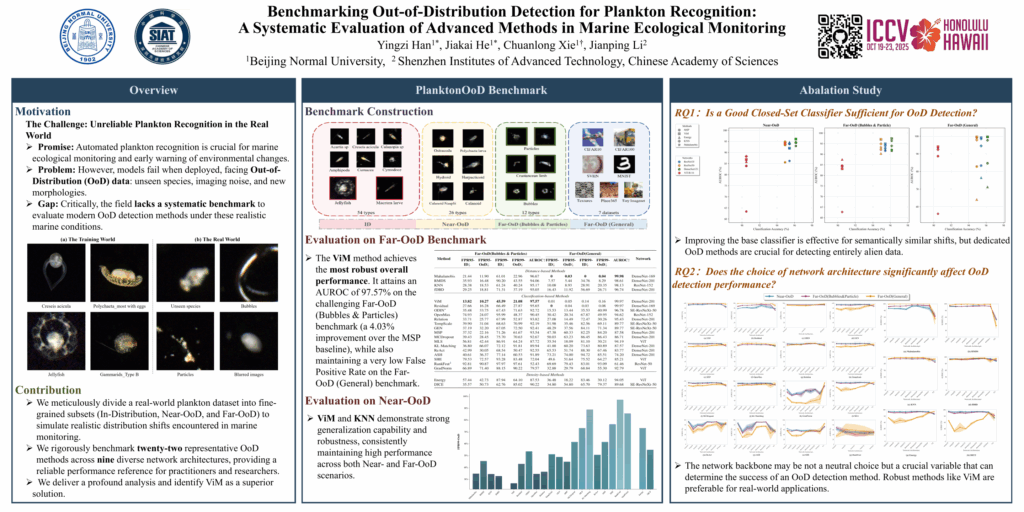

Benchmarking Out-of-Distribution Detection for Plankton Recognition: A Systematic Evaluation of Advanced Methods in Marine Ecological Monitoring

Yingzi Han, Jiakai He, Chuanlong Xie, Jianping Li

Abstract

Automated plankton recognition models face significant challenges during real-world deployment due to distribution shifts (Out-of-Distribution, OoD) between training and test data. This stems from plankton’s complex morphologies, vast species diversity, and the continuous discovery of novel species, which leads to unpredictable errors during inference. Despite rapid advancements in OoD detection methods in recent years, the field of plankton recognition still lacks a systematic integration of the latest computer vision developments and a unified benchmark for large-scale evaluation. To address this, this paper meticulously designed a series of OoD benchmarks simulating various distribution shift scenarios based on the DYB-PlanktonNet dataset, and systematically evaluated twenty-two OoD detection methods. Extensive experimental results demonstrate that the ViM method significantly outperforms other approaches in our constructed benchmarks, particularly excelling in Far-OoD scenarios with substantial improvements in key metrics. This comprehensive evaluation not only provides a reliable reference for algorithm selection in automated plankton recognition but also lays a solid foundation for future research in plankton OoD detection. To our knowledge, this study marks the first large-scale, systematic evaluation and analysis of Out-of-Distribution data detection methods in plankton recognition.

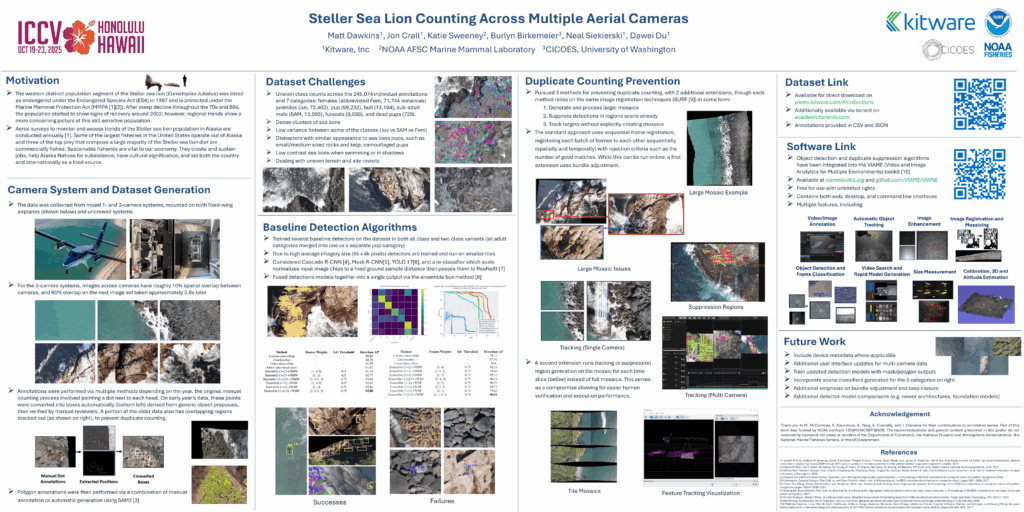

Steller Sea Lion Counting Across Multiple Aerial Cameras

Matthew Dawkins, Jon Crall, Katie Sweeney, Burlyn Birkemeier, Neal Siekierski, Dawei Du

Abstract

Steller sea lions, who tend to live on the rocky shores and coastal waters of the subarctic, have faced declining populations since the 1970s and were listed as endangered in 1997 within distinct western population centers. Monitoring their populations is therefore crucial to future management and setting government regulations. In this paper, we present a novel dataset for sea lion detection and identification across multiple cameras, which includes different labels for age and sex sub-classification, alongside related distractor animal species such as fur seals. The data was collected from mixed 1- and 3-camera systems, mounted on both fixed-wing airplanes and unmanned systems. The performance of several algorithms is compared on this dataset, including novel detector ensembles and multi-sensor temporal tracking methods, which were developed in order to produce accurate population counts.

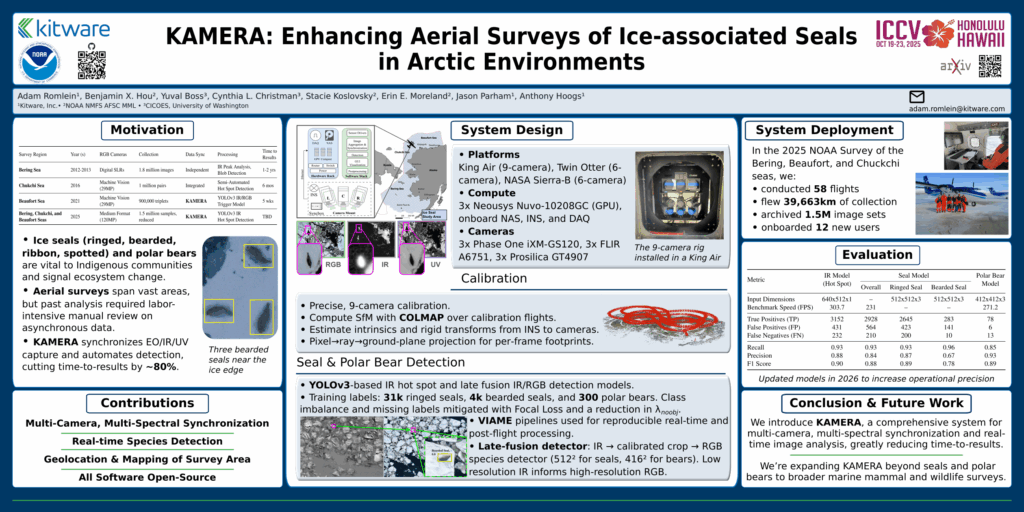

KAMERA: Enhancing Aerial Surveys of Ice-associated Seals in Arctic Environments

Adam Romlein, Benjamin X. Hou, Yuval Boss, Cynthia L. Christman, Stacie Koslovsky, Erin E. Moreland, Jason Parham, Anthony Hoogs

Abstract

We introduce KAMERA, a comprehensive system for multi-camera, multi-spectral, real-time camera synchronization, control and image analysis. Utilized in aerial surveys for ice-associated seals in the Bering, Chukchi, and Beaufort seas around Alaska, KAMERA provides up to an 80% reduction in dataset processing time over previous methods. Our rigorous calibration and hardware synchronization enable using multiple spectra for object detection. All data collected are annotated with metadata, easily referenced at a later time. All imagery and animal detections from a survey are mapped into a world plane for accurate estimates of the area surveyed and quick assessment of survey results. We hope KAMERA will inspire other mapping & detection efforts in the scientific community, with all software, models, and schematics fully open-sourced.

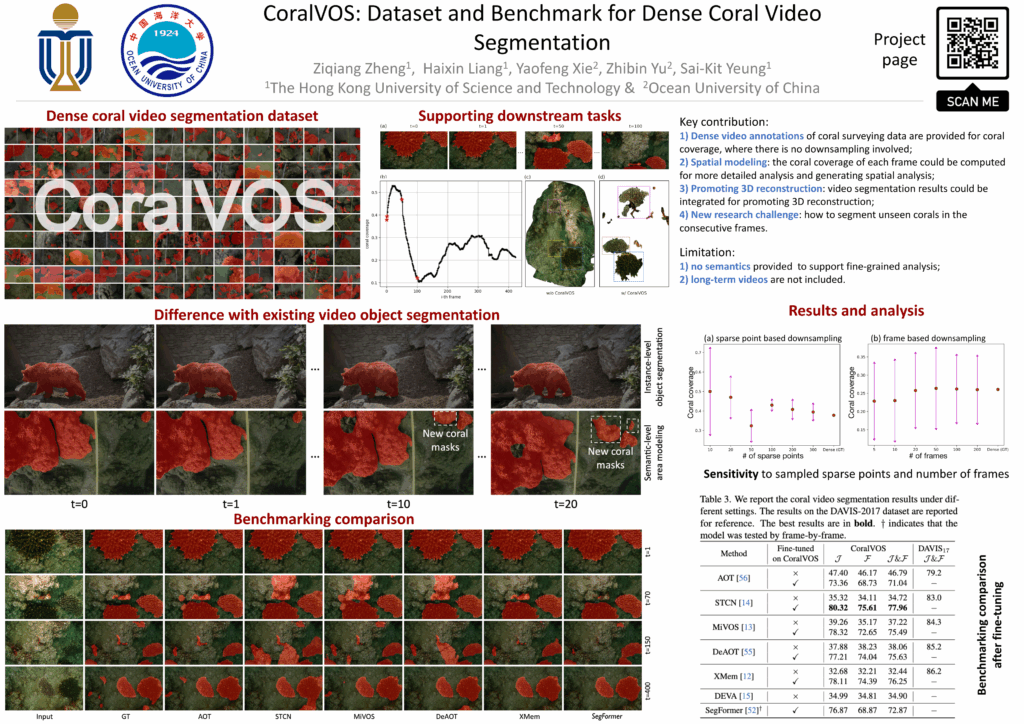

CoralVOS: Dataset and Benchmark for Dense Coral Video Segmentation

Zheng Ziqiang, Liang Haixin, Yaofeng Xie, Zhibin Yu, Sai-Kit Yeung

Abstract

Coral reefs form the most valuable and productive marine ecosystems, providing habitat for a wide range of marine species. Coral reef surveying and analysis are currently confined to coral experts, who invest substantial effort in generating comprehensive and reliable reports (e.g., coral coverage, population, spatial distribution) from the collected survey data. Because dense coral analysis based on manual effort is time-consuming and expensive, existing coral analysis algorithms compromise by down-sampling and then conducting only sparse point-based coral analysis within selected frames from the whole video. However, such down-sampling inevitably introduces estimation bias (under- or over-estimation) or even leads to wrong results. To address this issue, we propose to perform dense coral video segmentation, with no down-sampling involved during the whole analysis procedure. Through video object segmentation, we can generate more reliable and in-depth coral analysis than existing coral reef analytical approaches. To boost such dense coral analysis, we propose a large-scale coral video segmentation dataset, CoralVOS, as demonstrated in Fig. 1. To the best of our knowledge, CoralVOS is the first dataset to support dense coral video segmentation. We perform experiments on the CoralVOS dataset with 6 recent state-of-the-art video object segmentation (VOS) algorithms. We fine-tuned these VOS algorithms on CoralVOS and achieved observable performance improvements. The results show that coral reef analysis will greatly benefit from coral video segmentation and that there is still great potential for further improving segmentation accuracy. Finally, we demonstrate that CoralVOS can support coral population estimation, spatial coral reef modeling, and 3D coral reef reconstruction.